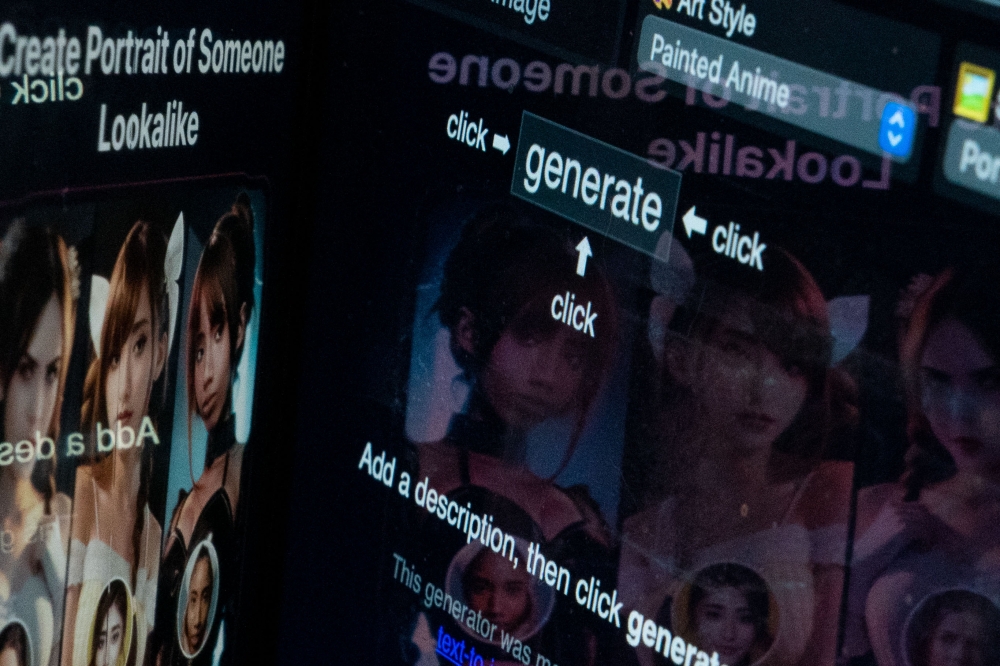

KUALA LUMPUR, July 18 — In today’s digital world, seeing is no longer believing.

With artificial intelligence (AI) becoming increasingly sophisticated, fake videos, audio clips and images that look and sound eerily real, known as deepfakes, are emerging as one of the biggest threats to truth and trust online.

According to David Chak, co-founder and director of Arus Academy, which runs media literacy education programmes across Malaysia, deepfakes are no longer just a futuristic fear.

What is a deepfake?

Chak explained that deepfakes are highly realistic videos, images or audio recordings created using AI, specifically machine learning.

He said these tools are trained on existing footage or recordings to imitate a person’s appearance, voice or mannerisms, so convincingly that they can trick even the most tech-savvy viewer.

“For example, with enough audio of Prime Minister Datuk Seri Anwar Ibrahim available online, AI can generate a deepfake video of him saying something completely fabricated, whether that’s a political statement or something as absurd as endorsing Oreo biscuits.

“These tools can be used for creative or entertainment purposes, but in the wrong hands, they are powerful tools of deception,” he told Malay Mail.

Main types of AI-generated content

Chak highlighted three main categories of AI-generated fake content:

1. Fake visuals (deepfake videos)

Videos of someone appearing to do or say something they never did. One disturbing example is deepfake pornography, where someone’s face is placed onto explicit content without their consent.

2. Fake audio

AI can mimic someone’s voice based on publicly available recordings. It is now possible to generate phone calls or voice notes that sound exactly like a politician, celebrity or even a loved one.

3. Combined audio and visual

When visuals and audio are merged into a single synthetic video, the result can be indistinguishable from real footage.

These are often disguised as breaking news or public announcements to manipulate emotions and spread misinformation.

How to detect AI-generated content

Dr Afnizanfaizal Abdullah, an AI researcher with the Malaysian Research Accelerator for Technology and Innovation (MRANTI), said there is a range of techniques for identifying content that has been manipulated using AI.

1. Unnatural blinking and facial movement

One common giveaway is how a person blinks.

In real life, people blink around 15 to 20 times per minute with slight variation, but deepfakes may show unnatural blinking patterns or none at all.

Changes in facial features from one video frame to the next may not align with natural human movement, making the footage appear subtly off.
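The blink-rate cue can be sketched as a simple check. This is a minimal illustration, assuming blink timestamps have already been extracted from the clip by some other tool. The function names and the tolerance margin are hypothetical and not from Afnizanfaizal; only the 15 to 20 blinks per minute range comes from the article.

```python
from typing import Sequence

# Typical range quoted in the article: roughly 15 to 20 blinks per minute.
TYPICAL_MIN_BPM = 15.0
TYPICAL_MAX_BPM = 20.0


def blinks_per_minute(blink_times_s: Sequence[float], clip_length_s: float) -> float:
    """Return the average blink rate of a clip, in blinks per minute."""
    if clip_length_s <= 0:
        raise ValueError("clip_length_s must be positive")
    return len(blink_times_s) * 60.0 / clip_length_s


def blink_rate_is_suspicious(
    blink_times_s: Sequence[float],
    clip_length_s: float,
    tolerance_bpm: float = 5.0,  # assumed margin, not a figure from the article
) -> bool:
    """Flag clips whose blink rate falls well outside the typical range."""
    rate = blinks_per_minute(blink_times_s, clip_length_s)
    too_low = rate < TYPICAL_MIN_BPM - tolerance_bpm
    too_high = rate > TYPICAL_MAX_BPM + tolerance_bpm
    return too_low or too_high
```

A clip with no blinks at all would be flagged, while nine blinks in 30 seconds (18 per minute) would not. A flag is only a prompt for closer inspection, since short clips and individual habits also affect blink rate.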

2. Facial asymmetry and visual inconsistencies

Minor imbalances in facial symmetry, especially around the eyes and mouth, can indicate manipulation.

Deepfake videos often contain visual flaws, such as noticeable differences in image quality between the face and background caused by unusual compression.

Edges around the altered parts of the face may look poorly blended or unnatural.
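One rough way to put a number on facial asymmetry is to mirror landmarks from one side of the face across a vertical midline and measure how far they land from their counterparts. The sketch below assumes paired (x, y) landmark coordinates and a midline position are already available from a face-landmark tool. The function name and inputs are hypothetical, and this is not the method used by any of the tools named in this article.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def asymmetry_score(
    left_points: Sequence[Point],
    right_points: Sequence[Point],
    midline_x: float,
) -> float:
    """Average distance between left landmarks and mirrored right landmarks.

    A score of 0.0 means the paired landmarks are perfectly symmetric
    about the vertical line x = midline_x.
    """
    if not left_points or len(left_points) != len(right_points):
        raise ValueError("need equal, non-empty lists of paired landmarks")
    total = 0.0
    for (lx, ly), (rx, ry) in zip(left_points, right_points):
        mirrored_rx = 2.0 * midline_x - rx  # reflect across the midline
        total += math.hypot(lx - mirrored_rx, ly - ry)
    return total / len(left_points)
```

Real faces are never perfectly symmetric, so a score like this is only meaningful when compared against other frames or known genuine footage of the same person.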

3. Lighting and shadow mismatches

Lighting inconsistencies, such as mismatched shadows, highlights or reflections, can make the video appear unrealistic.

4. Frequency and noise anomalies

Deepfakes can leave behind unusual frequency patterns in both the audio and video signals.

Artificial clips often have different background noise or grain compared to authentic recordings, which can be detected through technical analysis.
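As a rough illustration of what technical analysis of frequency patterns can mean, the sketch below measures how much of a signal's energy sits in its upper frequency bins. It assumes a short one-dimensional list of samples, such as a slice of audio or one row of pixel values. The function names and the 0.5 cutoff are hypothetical, and a plain discrete Fourier transform is used here for clarity rather than any detector's actual method.

```python
import cmath
import math
from typing import List, Sequence


def dft_magnitudes(samples: Sequence[float]) -> List[float]:
    """Magnitudes of the DFT bins from 0 up to the Nyquist bin."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]


def high_frequency_ratio(samples: Sequence[float], cutoff_fraction: float = 0.5) -> float:
    """Share of non-DC spectral energy that lies above the cutoff.

    cutoff_fraction is an assumed split point, not a value from the article.
    """
    energy = [m * m for m in dft_magnitudes(samples)[1:]]  # skip the DC bin
    total = sum(energy)
    if total == 0:
        return 0.0
    cutoff = int(len(energy) * cutoff_fraction)
    return sum(energy[cutoff:]) / total
```

Comparing this ratio between a suspect clip and a known authentic recording from the same source is one way an unusual noise or grain profile might show up.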

Existing detection tools struggling to keep up

Although detection tools are improving, Afnizanfaizal said they are still struggling to keep pace with increasingly sophisticated AI.

He cited research showing that detection accuracy can drop from 90 per cent to below 60 per cent after a video is forwarded or reshared several times.

He then explained that commercial tools such as Sentinel, Reality Defender and DuckDuckGoose AI offer detection services using algorithms that analyse facial landmarks, motion consistency and spectral patterns.

However, these are most effective when analysing original, high-resolution content.

“After three or four compression cycles, the digital fingerprints that help us detect deepfakes are often lost. That makes platforms like TikTok and WhatsApp especially challenging environments for verification,” he added.
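The effect of repeated lossy processing can be illustrated with a toy signal. In the sketch below, a faint high-frequency "fingerprint" is added to a slow-moving signal, and each compression cycle is approximated by a simple smoothing pass. This is an assumption made purely for illustration: the smoothing filter is a crude stand-in for re-encoding and is not how TikTok, WhatsApp or any real codec works, and all names and values are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def smooth_once(samples: Sequence[float]) -> List[float]:
    """One lossy pass: circular 3-point weighted average (stand-in for re-encoding)."""
    n = len(samples)
    return [
        0.25 * samples[i - 1] + 0.5 * samples[i] + 0.25 * samples[(i + 1) % n]
        for i in range(n)
    ]


def bin_amplitude(samples: Sequence[float], k: int) -> float:
    """Amplitude of the sinusoid at DFT bin k (valid for 0 < k < n/2)."""
    n = len(samples)
    coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
    return 2.0 * abs(coeff) / n


def fingerprint_after_cycles(
    cycles: int,
    n: int = 64,
    content_bin: int = 1,        # slow "content" component (assumed)
    fingerprint_bin: int = 24,   # faint high-frequency trace (assumed)
    fingerprint_amp: float = 0.05,
) -> List[float]:
    """Fingerprint amplitude before any pass and after each smoothing pass."""
    signal = [
        math.sin(2 * math.pi * content_bin * t / n)
        + fingerprint_amp * math.sin(2 * math.pi * fingerprint_bin * t / n)
        for t in range(n)
    ]
    remaining = [bin_amplitude(signal, fingerprint_bin)]
    for _ in range(cycles):
        signal = smooth_once(signal)
        remaining.append(bin_amplitude(signal, fingerprint_bin))
    return remaining
```

Calling `fingerprint_after_cycles(4)` returns a list in which the fingerprint amplitude shrinks sharply with every pass while the slow content component is barely affected, which mirrors the general point that fine traces fade long before the picture looks degraded.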

He also warned that synthetic audio and voice-cloning technologies are increasingly being exploited in Malaysian fraud cases, with the sophistication of these threats rising at an alarming pace.

From a technical standpoint, the barriers to voice cloning have crumbled: modern AI voice synthesis can generate convincing clones from as little as 30 seconds of recorded speech.

“This accessibility has democratised voice cloning for criminal purposes, shifting it from the exclusive domain of state-level actors to tools now easily available to petty criminals,” he said.

He added that criminal syndicates now use automated systems to extract voice samples from social media, video calls and phone recordings to quickly generate cloned voices.

Some operations even maintain databases of voice profiles, specifically targeting high-value individuals or those with a strong social media presence.

Looking ahead, he said that voice-based threats are likely to become even more advanced, incorporating emotional nuance, more accurate accent replication and potentially real-time language translation.

Call for public awareness and regulations

Dr Mazlan Abbas, a technology expert and CEO of local IoT company Favoriot Sdn Bhd, believes that while detection tools are important, the best defence lies in public awareness and stronger regulation.

Despite the increasing prevalence of such content, he said Malaysia currently has no specific legislation addressing deepfakes.

“Enforcement agencies are still relying on existing laws such as the Communications and Multimedia Act to investigate malicious content or scams.

“But frankly, the technology is moving too fast for us to keep relying on old frameworks alone. We need regulations that are fit for this new digital era,” he said.

When asked whether AI literacy and deepfake awareness should be formally introduced into the national education curriculum, he said it is crucial to start equipping the younger generation for the realities of an AI-driven future.

“We need to prepare our young people for this AI-driven world. Teaching deepfake awareness, digital ethics and AI literacy in schools will equip them with the critical thinking skills to question, verify and navigate the content they encounter online,” he said.

However, he cautioned that education efforts should not overlook older generations, as they are among the most vulnerable targets for scammers using deepfakes.

* Coming up in Part 2: Real voices, real faces — all faked. We break down how AI-generated scams are hitting Malaysians hard, from cloned boss calls to deepfake videos featuring politicians.